The BERT algorithm is a machine learning algorithm that helps the Google search engine understand what words in a sentence mean. BERT pays particular attention to the nuances of context.

BERT began rolling out in October 2019.

What Is the Google BERT Algorithm?

Google has said that BERT is the biggest advance in search algorithms in the past five years, and “one of the biggest leaps forward in the history of Search”.

BERT stands for ‘Bidirectional Encoder Representations from Transformers’. It’s a deep learning model for natural language processing (NLP), and it can be adapted to NLP tasks such as:

- entity recognition

- part of speech tagging

- question-answering.

What Does the BERT Algorithm Do?

The first thing to note is that, unlike previous updates such as Panda and Penguin, BERT is not intended to penalize websites.

Rather, it helps Google understand searcher intent. In fact, the focus of BERT is not on content but on understanding search queries.

BERT helps Google better understand the meaning of the words in a search query by paying attention to their context and their order.

BERT Is Bidirectional

Many earlier language models read text in only one direction. BERT is bidirectional instead, which allows it to better understand the context provided by the words on either side of a given word. Rani Horev explains it as follows:

As opposed to directional models, which read the text input sequentially (left-to-right or right-to-left), the Transformer encoder reads the entire sequence of words at once. Therefore it is considered bidirectional, though it would be more accurate to say that it’s non-directional. This characteristic allows the model to learn the context of a word based on all of its surroundings (left and right of the word).

– Rani Horev, Towards Data Science
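To make the difference concrete, here is a toy Python sketch. It is not BERT and not how Google implements anything; it simply lists which words of the query “Can you get medicine for someone pharmacy” are available as context for the word “for”, first to a left-to-right reader and then to a reader that sees the whole sequence at once. The function names are made up for illustration.

```python
def left_context(tokens, i):
    """Words a left-to-right reader has already seen before position i."""
    return tokens[:i]


def bidirectional_context(tokens, i):
    """Words on both sides of position i (the word itself is excluded)."""
    return tokens[:i] + tokens[i + 1:]


query = "can you get medicine for someone pharmacy".split()
i = query.index("for")  # position of the word we want context for

print(left_context(query, i))
# ['can', 'you', 'get', 'medicine']
print(bidirectional_context(query, i))
# ['can', 'you', 'get', 'medicine', 'someone', 'pharmacy']
```

Only the bidirectional view includes “someone”, the word that tells you who the medicine is for.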

Whereas previous algorithms tended to treat queries as a ‘bag of words’ with no particular order, the Google BERT algorithm looks very closely at the order of words in the query.

This helps it to understand the meaning of the query.
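A quick way to see what a ‘bag of words’ loses: the toy Python sketch below (a simple word counter, not Google’s actual query processing) treats two queries with opposite meanings as identical, because it only counts words and throws away their order.

```python
from collections import Counter


def bag_of_words(query: str) -> Counter:
    """Count each lowercased word; the order of the words is discarded."""
    return Counter(query.lower().split())


a = "2019 Brazil traveler to USA need a visa"
b = "2019 USA traveler to Brazil need a visa"

# Same words, same counts, so the bag-of-words view cannot tell them apart.
print(bag_of_words(a) == bag_of_words(b))      # True
# Keeping the words in order shows the queries are different.
print(a.lower().split() == b.lower().split())  # False
```

An order-aware model like BERT starts from the ordered sequence, so the direction of travel is still there to be understood.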

BERT and ‘stop words’

If you’ve been optimizing your web pages for a while, you’ve probably come across the notion that ‘stop words’ are unimportant in SEO. Stop words are very common words, including prepositions such as “to” and “from”.

With BERT, stop words have suddenly become very important. Instead of discarding them from its analysis of what a search query means, BERT looks very closely at prepositions and where they appear in a search query.

Google gives the following example:

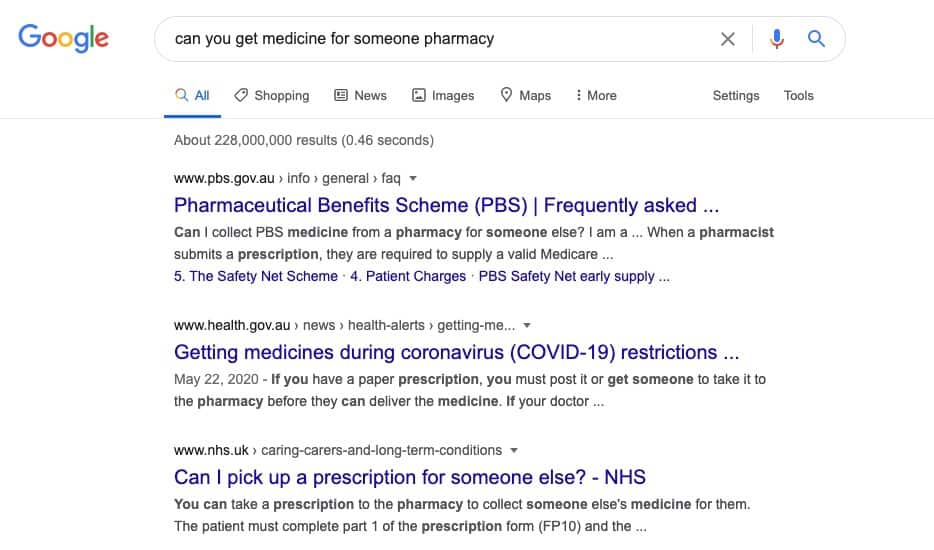

Before BERT, the query “Can you get medicine for someone pharmacy” would have returned general information about filling prescriptions at pharmacies.

But the BERT algorithm pays particular attention to the preposition “for”. Google now understands that you’re asking if a person can pick up someone else’s prescription from a pharmacy. And it’s all because of that little preposition “for”.

Here’s another example:

Before BERT, the search query “2019 Brazil traveler to usa need a visa” would have returned information for US citizens traveling to Brazil, because Google would have missed the significance of the word “to”.

But with BERT, Google understands that the searcher wants information about visa requirements for Brazilians traveling to the USA. Again, it’s because of the preposition in the query.
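Here is a toy Python sketch of the old ‘strip the stop words’ habit, applied to both example queries. The stop word list is a small, hypothetical one chosen for illustration; real search engines and NLP libraries use their own, much longer lists.

```python
# Hypothetical stop word list, for illustration only.
STOP_WORDS = {"a", "can", "for", "from", "the", "to", "you"}


def strip_stop_words(query):
    """Lowercase the query and drop any word found in STOP_WORDS."""
    return [w for w in query.lower().split() if w not in STOP_WORDS]


print(strip_stop_words("Can you get medicine for someone pharmacy"))
# ['get', 'medicine', 'someone', 'pharmacy']
print(strip_stop_words("2019 Brazil traveler to USA need a visa"))
# ['2019', 'brazil', 'traveler', 'usa', 'need', 'visa']
```

Once “for” and “to” are gone, nothing left in either query says who the medicine is for or which way the traveler is going.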

What Impact Will BERT Have on SEO?

Google has said that BERT currently affects 1 in 10 English-language search queries in the US. On the face of it, affecting 10% of those searches seems like a significant impact.

But because BERT comes into play mainly for long-tail keywords rather than head keywords, its effect is probably not being felt for the short, high-volume queries at the top end of the market.

The Google BERT algorithm is going to have a big impact on search queries where prepositions are key to understanding what the searcher is looking for.

This means that BERT will have an impact on two kinds of search queries in particular:

- long-tail keyword phrases containing five or more words (as opposed to short search queries of two or three words)

- conversational search queries
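If you want a rough way to pick out long-tail phrases from your own keyword list, the sketch below applies the five-word rule of thumb mentioned above. It’s a simple word count in Python, not anything Google uses, and the `min_words` threshold is just a parameter you can adjust.

```python
def is_long_tail(query: str, min_words: int = 5) -> bool:
    """Rough heuristic: treat queries of min_words or more words as long-tail."""
    return len(query.split()) >= min_words


keywords = [
    "pharmacy",
    "brazil visa",
    "can you get medicine for someone pharmacy",
    "2019 brazil traveler to usa need a visa",
]

long_tail = [k for k in keywords if is_long_tail(k)]
print(long_tail)
# ['can you get medicine for someone pharmacy', '2019 brazil traveler to usa need a visa']
```

Word count is only a proxy: a short query can still be conversational, so treat the result as a starting point for review.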

Because BERT deals with complex search queries, it’s probably going to make in-depth content more discoverable.

So websites that have long-form content such as guides, tutorials, and ‘how-to’ articles stand to gain the most from BERT.

Can You Optimize for BERT?

Google’s Danny Sullivan has said there’s nothing to optimize for with BERT:

There’s nothing to optimize for with BERT, nor anything for anyone to be rethinking. The fundamentals of us seeking to reward great content remain unchanged (October 28, 2019)

– Danny Sullivan (Search Liaison, Google)

With BERT (as with the RankBrain algorithm), the more natural your content, the better it is likely to rank.

And you can’t optimize for ‘natural’ – the more you attempt to optimize a piece of content, the less natural it becomes.

In fact, the only way you can optimize for the Google BERT algorithm is by taking your focus off the search engines completely and writing for your human audience instead.

Conclusion

The Google BERT algorithm is the latest example of how machine learning is changing the face of organic search.

As we move towards virtual assistants and mobile search, it becomes more and more important for Google to understand conversational search queries.

The BERT algorithm reflects Google’s growing focus on natural language processing (NLP).